Week 20 - 120 HN release, Ollama and Anthropic integration

Jul 13th, 2025

This week marks a significant milestone for 120 AI Chat: local LLM support through Ollama and Anthropic integration, along with the debut of our second native application, 120 HN (a Hacker News client). We've also refined core Markdown rendering components to ensure consistent, professional presentation across all conversation types.

New application launch: 120 HN

This week we released 120 HN, a fully native Hacker News client that reflects our commitment to fast, responsive macOS applications. The new app shares the same design philosophy and component architecture as 120 AI Chat.

Native performance: Built from scratch with native macOS components, 120 HN delivers smooth scrolling and responsive interactions that feel natural within the Mac ecosystem.

Clean interface design: The app features a minimal, distraction-free interface that prioritizes content readability while maintaining the visual consistency users expect from professional Mac applications.

AI integration foundation: 120 HN includes a built-in AI assistant that works with your OpenAI API key, with support for Claude and local LLMs planned. This enables a workflow where users can discuss Hacker News content with an AI assistant directly in the app.

Cross-platform roadmap: While the macOS version is available now, Windows and Linux versions are in development, extending our native application approach to more platforms.

120 HN represents our broader commitment to creating purpose-built native applications rather than web-based solutions. Every component is implemented specifically for optimal performance and user experience on each target platform.
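To illustrate the assistant workflow described above, here is a minimal TypeScript sketch of how a story could be handed to a chat model; it is not 120 HN's actual code. It assumes the item shape returned by the public Hacker News API (`https://hacker-news.firebaseio.com/v0/item/<id>.json`) and the `messages` format of the OpenAI Chat Completions API. `buildHnDiscussionMessages`, the prompt wording, and the sample item are hypothetical.

```typescript
// Subset of the item shape returned by the public Hacker News API
// (https://hacker-news.firebaseio.com/v0/item/<id>.json); most fields are optional.
interface HnItem {
  id: number;
  by?: string;
  title?: string;
  url?: string;
  text?: string; // HTML body for Ask HN posts and comments
  score?: number;
}

// Message shape used by the OpenAI Chat Completions API.
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// Hypothetical helper: packs a story's details and the user's question into chat messages.
function buildHnDiscussionMessages(item: HnItem, question: string): ChatMessage[] {
  const details: string[] = [];
  if (item.title) details.push(`Title: ${item.title}`);
  if (item.url) details.push(`Link: ${item.url}`);
  if (item.by) details.push(`Submitted by: ${item.by}`);
  if (item.score !== undefined) details.push(`Points: ${item.score}`);
  if (item.text) details.push(`Text: ${item.text}`);
  details.push(`Thread: https://news.ycombinator.com/item?id=${item.id}`);

  return [
    {
      role: "system",
      content: "You help the user discuss Hacker News stories. Base answers on the story details given.",
    },
    { role: "user", content: `${details.join("\n")}\n\nQuestion: ${question}` },
  ];
}

// Sample item for illustration only.
const messages = buildHnDiscussionMessages(
  { id: 1, by: "alice", title: "Show HN: An example project", url: "https://example.com", score: 42 },
  "What are the main criticisms in the thread?",
);
console.log(messages[1].content);
```

The resulting array would go in the `messages` field of a POST to `https://api.openai.com/v1/chat/completions`, together with a `model` name and an `Authorization: Bearer <key>` header.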

Local LLM support through Ollama

120 AI Chat now supports local language models through Ollama integration, providing users with complete privacy and offline capabilities. This implementation allows users to run conversations entirely on their Mac without sending data to external services.

We've integrated support for Mistral, Llama 3.2, Gemma 3, and DeepSeek R1, giving users access to powerful local AI capabilities. Each model has different strengths, from coding assistance to creative writing.
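For readers curious how a local setup fits together: Ollama runs a local HTTP server, and a chat turn is a single POST to its `/api/chat` endpoint. The TypeScript sketch below only builds that request and is not 120 AI Chat's code. It assumes Ollama's default address (`http://localhost:11434`) and the Ollama library tags `mistral`, `llama3.2`, `gemma3`, and `deepseek-r1`; `buildOllamaChatRequest` and `HttpRequest` are hypothetical names.

```typescript
// Ollama library tags for the models mentioned above; each must be pulled first,
// e.g. `ollama pull llama3.2`.
const LOCAL_MODELS = ["mistral", "llama3.2", "gemma3", "deepseek-r1"] as const;
type LocalModel = (typeof LOCAL_MODELS)[number];

interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// Plain description of an HTTP request, ready to hand to fetch().
interface HttpRequest {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

// Ollama's server listens on localhost:11434 by default, so the request stays on the Mac.
const OLLAMA_BASE_URL = "http://localhost:11434";

function buildOllamaChatRequest(model: LocalModel, messages: ChatMessage[]): HttpRequest {
  return {
    url: `${OLLAMA_BASE_URL}/api/chat`,
    method: "POST",
    headers: { "Content-Type": "application/json" },
    // stream: false returns one JSON object instead of a stream of partial chunks.
    body: JSON.stringify({ model, messages, stream: false }),
  };
}

const req = buildOllamaChatRequest("llama3.2", [
  { role: "user", content: "Explain the tradeoffs of running a model locally." },
]);
console.log(`${req.method} ${req.url}`);
```

Sending it with `fetch(req.url, { method: req.method, headers: req.headers, body: req.body })` returns JSON whose `message.content` holds the model's reply. Installed tags can include a size variant (for example `gemma3:4b`), so `ollama list` is the place to check what is actually available.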

Local LLM support serves enterprise and privacy-conscious users who need AI assistance without cloud dependencies. Conversations stay entirely on the device while keeping the same intuitive 120 AI Chat interface.

Anthropic API integration

We've integrated the official Anthropic API, bringing Claude Opus 4 and Claude Sonnet 4 directly into 120 AI Chat. This significantly broadens the AI capabilities available to users within our native macOS interface.
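As a rough picture of what talking to Claude involves, here is a minimal TypeScript sketch of a request to Anthropic's Messages API; it is an illustration, not 120 AI Chat's implementation. The endpoint, the `x-api-key` and `anthropic-version` headers, the required `max_tokens` field, and the model IDs follow Anthropic's public API docs; `buildClaudeRequest`, `HttpRequest`, and the `YOUR_API_KEY` placeholder are hypothetical.

```typescript
// Claude 4 model IDs as published by Anthropic; newer snapshots may exist.
const CLAUDE_MODELS = {
  opus4: "claude-opus-4-20250514",
  sonnet4: "claude-sonnet-4-20250514",
} as const;

interface ClaudeMessage {
  role: "user" | "assistant";
  content: string;
}

// Plain description of an HTTP request, ready to hand to fetch().
interface HttpRequest {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

function buildClaudeRequest(
  apiKey: string,
  model: string,
  messages: ClaudeMessage[],
  maxTokens = 1024,
): HttpRequest {
  return {
    url: "https://api.anthropic.com/v1/messages",
    method: "POST",
    headers: {
      "x-api-key": apiKey, // the user's own Anthropic key
      "anthropic-version": "2023-06-01", // required API version header
      "content-type": "application/json",
    },
    // max_tokens is a required field in the Messages API.
    body: JSON.stringify({ model, max_tokens: maxTokens, messages }),
  };
}

const req = buildClaudeRequest("YOUR_API_KEY", CLAUDE_MODELS.sonnet4, [
  { role: "user", content: "Summarize this diff in two sentences." },
]);
console.log(`${req.method} ${req.url}`);
```

In the response, the `content` array holds the reply blocks; for plain text, a block has `type: "text"` and a `text` field.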

Users can now switch between AI models based on their specific needs, from Claude's reasoning capabilities to the privacy advantages of local models, all within the same familiar 120 AI Chat experience.

Model selection integrates with our existing conversation system, so users can change AI providers mid-conversation while keeping context and chat history.
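Switching providers mid-conversation suggests storing one provider-neutral history and translating it per request. The TypeScript sketch below shows that idea under assumptions: `StoredMessage`, `toAnthropic`, and `toOllama` are hypothetical, not 120 AI Chat's code. The one real API difference it handles is documented behavior: Anthropic's Messages API takes the system prompt as a top-level `system` field, while Ollama's `/api/chat` accepts `system`-role messages inline.

```typescript
type Role = "system" | "user" | "assistant";

// Hypothetical provider-neutral format the app could store chat history in.
interface StoredMessage {
  role: Role;
  content: string;
}

type Turn = StoredMessage & { role: "user" | "assistant" };

// Anthropic Messages API: the system prompt goes in a separate top-level field.
interface AnthropicPayload {
  system?: string;
  messages: Turn[];
}

// Ollama /api/chat: system messages can stay inline in the messages array.
interface OllamaPayload {
  messages: StoredMessage[];
}

function toAnthropic(history: StoredMessage[]): AnthropicPayload {
  const system = history
    .filter((m) => m.role === "system")
    .map((m) => m.content)
    .join("\n\n");
  const messages = history
    .filter((m): m is Turn => m.role !== "system")
    .map((m) => ({ role: m.role, content: m.content }));
  return system ? { system, messages } : { messages };
}

function toOllama(history: StoredMessage[]): OllamaPayload {
  // Copy each message so request code never mutates the stored history.
  return { messages: history.map((m) => ({ ...m })) };
}

const history: StoredMessage[] = [
  { role: "system", content: "You are a helpful coding assistant." },
  { role: "user", content: "What does Rc<T> do in Rust?" },
  { role: "assistant", content: "It gives shared ownership through reference counting." },
  { role: "user", content: "And how is Arc<T> different?" },
];

// The same history can be sent to either provider, so switching models keeps context.
const forClaude = toAnthropic(history);
const forOllama = toOllama(history);
console.log(forClaude.messages.length, forOllama.messages.length);
```

Converting at request time keeps the stored conversation independent of whichever provider answered last.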

Markdown rendering optimization

We've significantly enhanced 120 AI Chat's Markdown rendering with improved heading components that maintain visual hierarchy regardless of conversation window size. AI-generated documentation, code explanations, and structured responses now display consistently whether users are working in a compact sidebar view or in full-screen conversation mode.

Heading consistency: All heading levels (H1-H6) now scale appropriately within 120 AI Chat's conversation panels, preventing oversized headings from disrupting conversation flow while maintaining clear information hierarchy. This is particularly important for technical discussions where AI responses often include structured documentation.
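The post doesn't describe how the scaling works internally. One plausible approach is to size each heading level as a multiple of the body font size and switch to a flatter scale in narrow panels. A minimal TypeScript sketch of that approach; the multipliers, the 480-point breakpoint, and `headingFontSize` are hypothetical, not 120 AI Chat's values:

```typescript
type HeadingLevel = 1 | 2 | 3 | 4 | 5 | 6;

// Hypothetical size multipliers relative to body text. The compact scale is flatter so an
// H1 doesn't dominate a narrow sidebar; in both, sizes never grow from H1 down to H6.
const FULL_SCALE: Record<HeadingLevel, number> = { 1: 1.75, 2: 1.5, 3: 1.25, 4: 1.125, 5: 1, 6: 0.875 };
const COMPACT_SCALE: Record<HeadingLevel, number> = { 1: 1.375, 2: 1.25, 3: 1.125, 4: 1, 5: 1, 6: 0.875 };

// Hypothetical width (in points) below which a conversation panel counts as compact.
const COMPACT_MAX_WIDTH = 480;

function headingFontSize(level: HeadingLevel, bodySize: number, panelWidth: number): number {
  const scale = panelWidth < COMPACT_MAX_WIDTH ? COMPACT_SCALE : FULL_SCALE;
  // Round to one decimal place to avoid fractional-point noise.
  return Math.round(bodySize * scale[level] * 10) / 10;
}

const levels: HeadingLevel[] = [1, 2, 3, 4, 5, 6];
console.log("full:", levels.map((l) => headingFontSize(l, 14, 1200)).join(", "));
console.log("compact:", levels.map((l) => headingFontSize(l, 14, 320)).join(", "));
```

Because both scales are tied to the body size, a user's text-size preference moves headings with it; levels that share a size (H4 and H5 in the compact scale) would rely on weight to stay distinct.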

Modern table styling

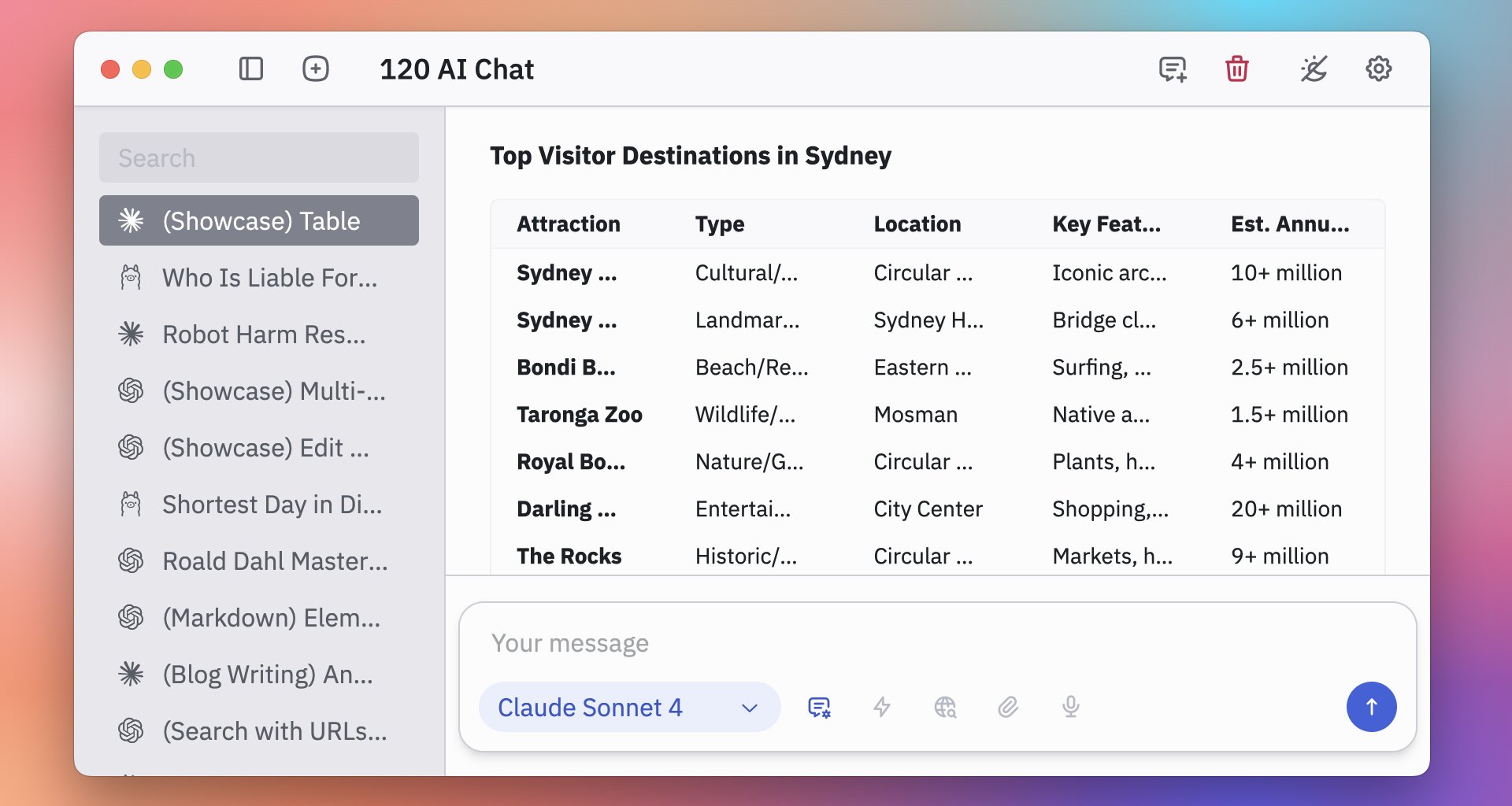

We've redesigned table rendering to provide a clean, professional appearance that matches modern macOS design standards. AI-generated tables now display with improved spacing, subtle borders, and enhanced typography that makes data comparison and analysis more comfortable during extended chat sessions.

Readability focus: The new table styling prioritizes data comprehension with proper contrast ratios, consistent padding, and clear row separation that works effectively in both light and dark modes.
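The post doesn't state which contrast targets the table styles use; a common yardstick is the WCAG 2.x contrast ratio, which compares the relative luminance of text and background. A small TypeScript sketch for checking a color pair in either light or dark mode; the function names are illustrative and the hex colors are examples, not 120 AI Chat's palette.

```typescript
// Parses "#rrggbb" into 0-255 channels.
function parseHex(hex: string): [number, number, number] {
  const m = /^#([0-9a-f]{2})([0-9a-f]{2})([0-9a-f]{2})$/i.exec(hex);
  if (!m) throw new Error(`Expected #rrggbb, got ${hex}`);
  return [parseInt(m[1], 16), parseInt(m[2], 16), parseInt(m[3], 16)];
}

// WCAG 2.x relative luminance: linearize each sRGB channel, then weight by perceived brightness.
function relativeLuminance(hex: string): number {
  const [r, g, b] = parseHex(hex).map((channel) => {
    const c = channel / 255;
    return c <= 0.04045 ? c / 12.92 : Math.pow((c + 0.055) / 1.055, 2.4);
  });
  return 0.2126 * r + 0.7152 * g + 0.0722 * b;
}

// Contrast ratio ranges from 1 (identical colors) to 21 (black on white); order doesn't matter.
function contrastRatio(fg: string, bg: string): number {
  const a = relativeLuminance(fg);
  const b = relativeLuminance(bg);
  const [lighter, darker] = a >= b ? [a, b] : [b, a];
  return (lighter + 0.05) / (darker + 0.05);
}

// WCAG AA thresholds: 4.5:1 for normal text, 3:1 for large text.
function meetsAA(fg: string, bg: string, largeText = false): boolean {
  return contrastRatio(fg, bg) >= (largeText ? 3 : 4.5);
}

console.log(contrastRatio("#767676", "#ffffff").toFixed(2));
```

Running the same check against both the light and dark background of a table row is a quick way to catch a border or secondary-text color that only works in one mode.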

Tip: Design system foundation with Radix UI

We've adopted Radix UI's color system as our design foundation, chosen specifically for its clear guidance on the purpose and context of each color. Its palette recommendations help us maintain accessibility standards while keeping all 120 AI Chat components visually consistent.
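Radix Colors (the color system behind Radix UI) documents a 12-step scale in which each step has an intended use: steps 1 and 2 for backgrounds, 3 to 5 for component backgrounds, 6 to 8 for borders, 9 and 10 for solid colors, and 11 and 12 for text. A minimal TypeScript sketch that encodes that guidance, assuming the CSS custom properties defined by the `@radix-ui/colors` stylesheets (`--gray-1` through `--gray-12`, redefined by the dark-theme stylesheets); the `token` helper and the `tableTokens` mapping are hypothetical, not 120 AI Chat's theme.

```typescript
// Intended use of each step in a Radix Colors scale.
const STEP_FOR = {
  appBackground: 1,
  subtleBackground: 2,
  uiElementBackground: 3,
  hoveredUiElementBackground: 4,
  activeUiElementBackground: 5,
  subtleBorder: 6,
  uiElementBorder: 7,
  hoveredUiElementBorder: 8,
  solidBackground: 9,
  hoveredSolidBackground: 10,
  lowContrastText: 11,
  highContrastText: 12,
} as const;

type Purpose = keyof typeof STEP_FOR;

// CSS custom property for a purpose, e.g. token("gray", "subtleBorder") -> "var(--gray-6)".
function token(scale: string, purpose: Purpose): string {
  return `var(--${scale}-${STEP_FOR[purpose]})`;
}

// Hypothetical table styling picked by purpose rather than by raw color values,
// so the same tokens resolve correctly in light and dark mode.
const tableTokens = {
  headerBackground: token("gray", "subtleBackground"),
  headerText: token("gray", "highContrastText"),
  rowSeparator: token("gray", "subtleBorder"),
  rowHoverBackground: token("gray", "hoveredUiElementBackground"),
  cellText: token("gray", "highContrastText"),
  captionText: token("gray", "lowContrastText"),
};

console.log(tableTokens);
```

Choosing colors by purpose means a reviewer can tell at a glance whether a border is using a border step or a text step, which is where the accessibility guidance pays off.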

Looking forward

Next week, we're focusing on the multi-threaded feature for 120 AI Chat and on gathering user feedback for both applications. While we continue improving 120 AI Chat, our main focus is now on exploring native table components, which will also help us develop the 120 Table app.

Stay tuned as we continue refining the native experience at 120.dev!