Week 21 - Multi-threaded conversations release with Gemma 3 added

Jul 20th, 2025

This week brings new releases of both 120 AI Chat and 120 HN, featuring multi-threaded conversations and several user experience improvements. Our focus has been on creating more natural workflows while maintaining the responsive, native macOS experience our users expect.

120 AI Chat v0.3.0: Multi-threaded conversations

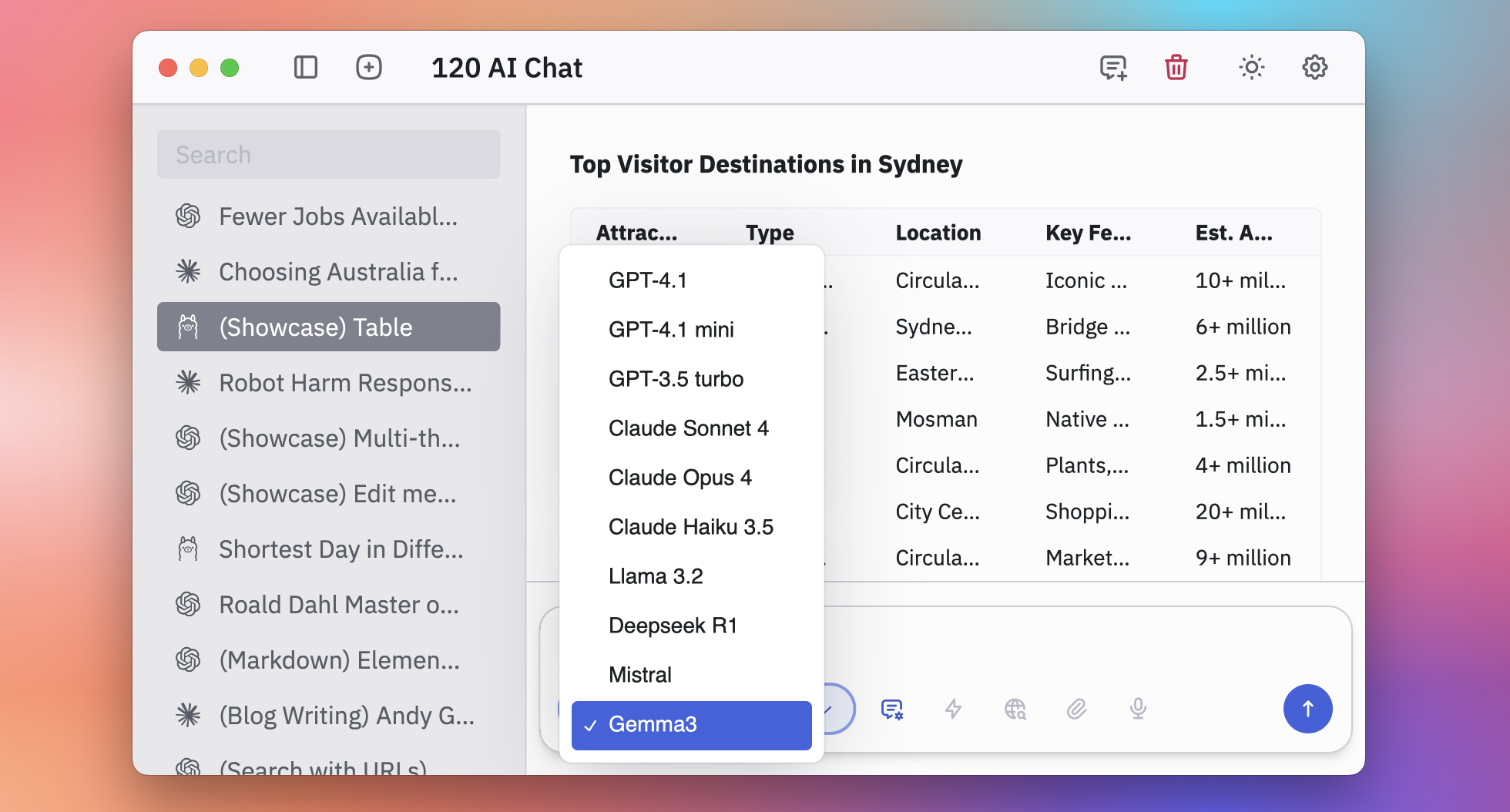

We've launched one of the most significant features in 120 AI Chat's development so far: multi-threaded conversations, which let users keep the same chat context while generating responses from different AI models. This makes it possible to compare models directly without losing conversation history or starting separate sessions.

Users can now put the same question to Claude Opus 4, local Llama models, and other integrated AI systems within a single conversation. Seeing the answers in one place shows how different models approach identical problems, from creative writing to technical troubleshooting.

This system also helps users understand model strengths and develop preferences for different types of tasks.

The threading system maintains full conversation context across all models, ensuring that follow-up questions and clarifications work seamlessly regardless of which model generated the previous response.
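For readers curious how a shared context across models might be represented, here is a minimal sketch. It is not 120 AI Chat's actual implementation; the `Turn` and `Conversation` types, the `selected` field, and the model labels are all hypothetical names chosen for illustration. The idea is that each user prompt can hold replies from several models, while one chosen reply per turn feeds the history sent with the next question.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Turn:
    """One user prompt plus every model's reply to it (hypothetical type)."""
    prompt: str
    replies: dict = field(default_factory=dict)  # model label -> reply text
    selected: Optional[str] = None  # label of the reply that continues the context


@dataclass
class Conversation:
    turns: list = field(default_factory=list)

    def ask(self, prompt: str) -> Turn:
        """Start a new turn with the user's prompt."""
        turn = Turn(prompt)
        self.turns.append(turn)
        return turn

    def add_reply(self, turn: Turn, model: str, text: str) -> None:
        """Store one model's reply; the first reply becomes the default selection."""
        turn.replies[model] = text
        if turn.selected is None:
            turn.selected = model

    def context(self) -> list:
        """Flatten the history into a role/content message list for any model."""
        messages = []
        for turn in self.turns:
            messages.append({"role": "user", "content": turn.prompt})
            if turn.selected is not None:
                messages.append(
                    {"role": "assistant", "content": turn.replies[turn.selected]}
                )
        return messages
```

Because `context()` is independent of which model produced the selected reply, a follow-up question can go to any model with the same history attached.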

Automatic scroll behavior

120 AI Chat now automatically scrolls to follow AI response generation in real time, keeping users focused on new content as it appears. This eliminates the manual scrolling required in previous versions and creates a more natural conversation rhythm.

Gemma 3

We've added Gemma 3 to our local LLM integration through Ollama, expanding the range of local AI options available to users. Gemma 3 offers competitive performance for many tasks while running entirely on user hardware.

Model diversity benefits: With Mistral, Llama 3.2, DeepSeek R1, and now Gemma 3, users have access to models suited to different use cases, from coding assistance to creative writing, all within the same native interface.
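As a rough illustration of what talking to a local model through Ollama looks like, the sketch below sends a chat request to Ollama's HTTP API. It assumes Ollama is running on its default local port and that the model has been pulled under the tag `gemma3`; the helper names `build_chat_request` and `chat` are our own for this example, and this is not necessarily how 120 AI Chat connects internally.

```python
import json
import urllib.request

# Ollama's default local chat endpoint (assumes a standard local install).
OLLAMA_URL = "http://localhost:11434/api/chat"


def build_chat_request(model, messages):
    """Build the JSON payload for a single, non-streaming chat call."""
    return {"model": model, "messages": messages, "stream": False}


def chat(model, messages, url=OLLAMA_URL):
    """POST the conversation to Ollama and return the assistant's reply text."""
    body = json.dumps(build_chat_request(model, messages)).encode("utf-8")
    req = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())["message"]["content"]
```

With Ollama running, a call such as `chat("gemma3", [{"role": "user", "content": "Hello"}])` would return the model's reply as a string. Swapping the model tag is all it takes to send the same messages to another local model.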

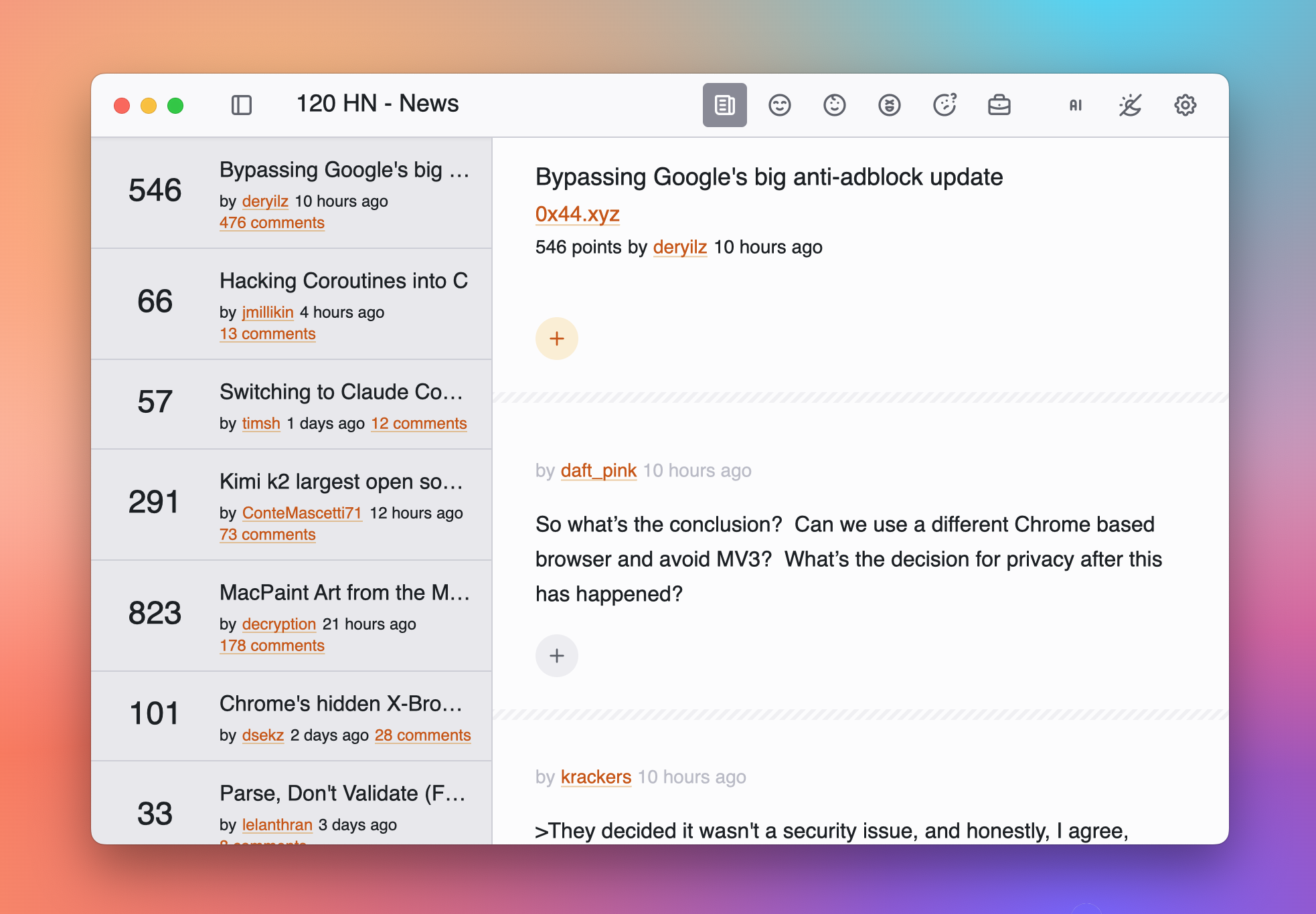

120 HN v0.2.1: Refined experience

120 HN now remembers user preferences across sessions, including window size, position, and sidebar state. This enhancement eliminates the daily friction of reconfiguring the interface and creates a more personalized experience.
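The general pattern behind remembering window state is simple: write it out when it changes and read it back at launch, falling back to defaults if nothing usable is stored. The sketch below shows that pattern with a JSON file. The `WindowPrefs` fields, default values, and function names are hypothetical, and 120 HN's native implementation may well use a different storage mechanism.

```python
import json
from dataclasses import asdict, dataclass
from pathlib import Path


@dataclass
class WindowPrefs:
    """Hypothetical set of remembered UI preferences with fallback defaults."""
    width: int = 1200
    height: int = 800
    x: int = 100
    y: int = 100
    sidebar_visible: bool = True


def save_prefs(prefs, path):
    """Write the preferences to disk as JSON."""
    Path(path).write_text(json.dumps(asdict(prefs)))


def load_prefs(path):
    """Read preferences back, using defaults if the file is missing or invalid."""
    try:
        data = json.loads(Path(path).read_text())
        return WindowPrefs(**data)
    except (FileNotFoundError, json.JSONDecodeError, TypeError):
        return WindowPrefs()
```

Falling back to defaults keeps the first launch, or a launch after a corrupted file, from failing.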

We've also refined the AI summary generation system to produce more concise, relevant content that enhances the Hacker News experience.

Summaries now use shorter paragraphs with a clearer structure, making them easier to scan during rapid news consumption. Each summary includes direct links back to relevant comments, maintaining a connection to the original discussion.

The AI filtering focuses on the most substantive discussion points, helping users quickly identify valuable conversations within busy comment threads.

Looking forward

Next week, we'll start developing the prompt library and prompt management features for 120 AI Chat. We'll also begin building the window frame for the upcoming 120 Table app, applying insights gained from recent improvements to our table component. For 120 HN, we will focus on adding support for local LLMs.

We would like to express our heartfelt gratitude to all our early adopters for embracing our native apps from the very beginning. Your enthusiastic support and insightful feedback have played a crucial role in shaping the direction of our development and helping us make continual improvements. We sincerely appreciate the time and effort you've invested in sharing your experiences and suggestions, and we look forward to growing together as we continue to enhance our apps for everyone.