Why pro AI users should use APIs to optimize costs in 2025

Sep 28th, 2025

As artificial intelligence becomes integral to businesses, developers, and power users, managing costs is crucial. In 2025, with models like GPT-5, Claude 4.1, and Gemini 2.5 Pro handling everything from code generation to data analysis, expenses can add up quickly. While subscription-based interfaces (e.g., ChatGPT Plus or Claude Pro) offer convenience, APIs provide a more flexible, pay-per-use model that is often cheaper for high-volume users. This post explains why pro AI users—those integrating AI into apps, workflows, or large-scale projects—should switch to APIs, with comparisons, examples, and tips based on the latest pricing as of September 2025.

Understanding AI subscriptions vs. APIs

AI subscriptions are fixed monthly plans that give access to models through user-friendly web or app interfaces. For example:

- OpenAI's ChatGPT Plus: $20/month for priority access to GPT-4o, with message limits.

- ChatGPT Pro: $200/month for near-unlimited use of advanced models like the o1 series.

- Anthropic's Claude Pro: $20/month for higher limits on Claude models.

- Claude Max: $100–$200/month for 5–20x higher limits, ideal for heavy individual use.

- Team plans: OpenAI Team ($25–$30/user/month, min 2 users) and Claude Team ($30/user/month, min 5 users) add collaboration features.

These are great for casual or moderate use but come with caps that can halt workflows for pros.

APIs, on the other hand, let you access models programmatically via code (e.g., Python SDKs). You pay only for what you use, measured in tokens (roughly 4 characters or 0.75 words per token). This pay-per-use model suits pro users building apps, automating tasks, or processing bulk data. Providers like OpenAI, Anthropic, Google, and xAI offer APIs with tiered pricing based on model intelligence and features like prompt caching.
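The token rule of thumb above can be turned into a quick estimator. This is a minimal sketch, not a real tokenizer: the function names are hypothetical, and actual token counts vary by model and language, so treat the result as a budgeting approximation only.

```python
import math

# Rules of thumb from the text: ~4 characters or ~0.75 words per token.
# Real tokenizers differ by model and language; this is only an estimate.
CHARS_PER_TOKEN = 4
WORDS_PER_TOKEN = 0.75


def estimate_tokens(text: str) -> int:
    """Rough token estimate from character count."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def estimate_tokens_from_words(word_count: int) -> int:
    """Rough token estimate from a word count."""
    return math.ceil(word_count / WORDS_PER_TOKEN)


prompt = "Summarize the attached quarterly report in five bullet points."
print(estimate_tokens(prompt))
print(estimate_tokens_from_words(750_000))  # about 1M tokens
```

For billing, the token counts reported by the provider in each API response are the authoritative figure; an estimator like this is mainly useful before a request is sent.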

Current API pricing landscape in 2025

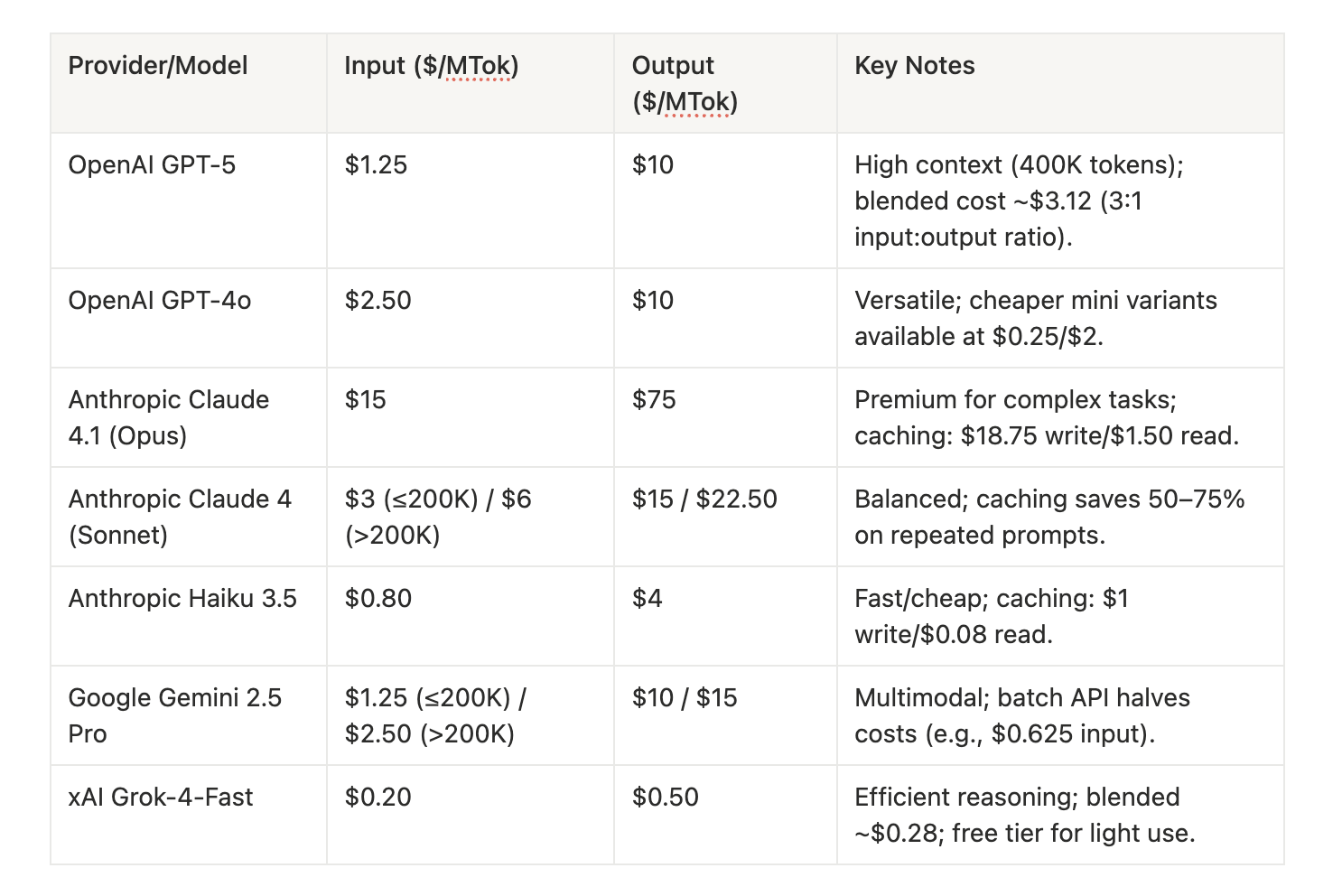

API costs vary by provider and model, with input (prompt) tokens often cheaper than output (response) tokens. Here's a comparison table of popular models' API pricing per million tokens (MTok), assuming standard rates without discounts:

Blended costs (assuming 3:1 input-to-output) make budget models like Grok-4-Fast ($0.28/MTok) or Haiku ($1.60/MTok) stand out for volume. Open-source alternatives (e.g., Llama 4 on hosts like CentML) can dip to $0.10/MTok, but require more setup.
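A blended rate is a weighted average of input and output prices. The sketch below shows the arithmetic for a 3:1 input-to-output mix. The $0.80 and $4.00 prices are assumptions chosen because they reproduce the $1.60/MTok Haiku figure quoted above; check the provider's current price list before relying on them.

```python
def blended_price(input_per_mtok: float, output_per_mtok: float,
                  input_parts: float = 3, output_parts: float = 1) -> float:
    """Weighted-average price per million tokens for a given input:output mix."""
    total_parts = input_parts + output_parts
    return (input_parts * input_per_mtok + output_parts * output_per_mtok) / total_parts


# Assumed list prices (illustrative): $0.80 input, $4.00 output per MTok
print(round(blended_price(0.80, 4.00), 2))  # 1.6
```

If your workload is output-heavy (for example, long generated reports), pass a different ratio: the blended rate rises quickly as the output share grows.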

Why APIs optimize costs for pro users

Subscriptions charge a flat fee regardless of usage, while APIs scale with your needs. For pro users (e.g., developers building chatbots or analysts processing reports), APIs are often 2–10x cheaper at scale. Here's the breakdown:

- Pay-Per-Use vs. Fixed Fees:

- A ChatGPT Plus subscriber pays $20/month even for light use, yet still hits limits (e.g., 80 messages/3 hours on GPT-4o). On the API, processing 1M tokens/month (about 750K words) with GPT-4o costs ~$4.50 (blended), far below $20.

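- Break-even: at a ~$4.50/MTok blended rate, $20 buys about 4.4M tokens/month and $200 buys about 44M. Below those volumes the API bill is lower than the flat fee; above them, a subscription's message caps can become the constraint before the flat fee pays off, which is common for apps handling customer queries.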

- Scalability for High Volume:

- Example: A startup with a chatbot serving 10,000 daily interactions (avg. 500 tokens each) uses ~150M tokens/month. Via Claude Pro ($20/month), you'd exceed limits and need upgrades to Max ($200/month) or Team ($150+/month). API with Haiku: ~$240 at the $1.60/MTok blended rate, and with caching that can drop to $100–$120.

- Enterprise subs (e.g., OpenAI Enterprise ~$60/user/month, min 150 users) cost $9,000+/month fixed, vs. API's variable $5,000 for 500M tokens on GPT-5.

- Customization and Efficiency:

- APIs allow model routing: Use cheap models (e.g., Gemini Flash <$0.40/MTok output) for simple tasks, premium for complex. This can save 20–50% vs. one-model subs.

- Features like batch processing (Google: 50% off) or prompt caching (Anthropic: 75% savings on repeats) aren't available in subs.

- Hidden Subscription Costs:

- Subs often require add-ons for teams or advanced features, pushing costs up 2–3x. APIs integrate directly into your stack (e.g., via SDKs), avoiding interface overhead.
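The pay-per-use arithmetic in the list above can be checked with a few lines of code. This is a minimal sketch with hypothetical function names; the blended rate is passed in as a parameter because the break-even volume shifts a lot depending on which model and input-to-output mix you assume.

```python
def monthly_api_cost(tokens_per_month: float, blended_per_mtok: float) -> float:
    """Pay-per-use bill in dollars for a month's token volume."""
    return tokens_per_month / 1_000_000 * blended_per_mtok


def break_even_tokens(flat_fee: float, blended_per_mtok: float) -> float:
    """Monthly token volume at which the API bill equals a flat subscription fee."""
    return flat_fee / blended_per_mtok * 1_000_000


# 1M tokens at the ~$4.50/MTok blended GPT-4o rate quoted in the text
print(monthly_api_cost(1_000_000, 4.50))   # 4.5

# Chatbot workload from the example: 10,000 interactions/day x 500 tokens x 30 days
chatbot_tokens = 10_000 * 500 * 30
print(chatbot_tokens)                      # 150000000

# Token volume that a $20 flat fee buys at $4.50/MTok
print(round(break_even_tokens(20, 4.50)))  # 4444444
```

The volume a flat fee buys shrinks as the per-token rate rises, so rerun the calculation with the rate for the model you actually plan to use.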

In short, for users exceeding 2–5M tokens/month, APIs cut costs by 30–70% while offering unlimited scaling.

Tips for optimizing API costs in 2025

- Monitor and Estimate: Use tools like OpenAI's usage dashboard or third-party calculators to track tokens. Assume a 3:1 input-to-output ratio for blended estimates unless your own usage data says otherwise.

- Leverage Discounts: Enable caching for repetitive prompts (saves 50–75%), use batch APIs for non-urgent jobs (50% off on Google), and take advantage of free credits (e.g., Anthropic's free tier for testing).

- Mix Models and Hosts: Route tasks—cheap for simple (Haiku $1.60 blended), premium for complex. For open-source, compare hosts (e.g., Llama on CentML $0.10/MTok vs. AWS $0.71).

- Start Small: Test with free API tiers, then scale. Integrate monitoring tools ($500–2,000/month) to save 20–30% via optimization.
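The routing and caching tips above can be sketched together. Everything here is an assumption for illustration: the model names and prices are placeholders, the keyword-based routing rule is a toy heuristic, and the discount is applied to the blended rate for simplicity even though real caching discounts typically apply to input tokens only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Model:
    name: str
    blended_per_mtok: float  # dollars per million tokens


# Hypothetical tiers and prices, for illustration only
CHEAP = Model("cheap-model", 0.40)
PREMIUM = Model("premium-model", 4.50)

COMPLEX_MARKERS = ("analyze", "refactor", "prove", "architecture")


def route(prompt: str, max_cheap_chars: int = 2_000) -> Model:
    """Toy rule: long prompts or prompts with 'complex' keywords go to the premium tier."""
    lowered = prompt.lower()
    is_complex = len(prompt) > max_cheap_chars or any(m in lowered for m in COMPLEX_MARKERS)
    return PREMIUM if is_complex else CHEAP


def discounted_cost(tokens: float, model: Model,
                    cached_fraction: float = 0.0, cache_discount: float = 0.5) -> float:
    """Cost in dollars after discounting the share of tokens served from cache."""
    full_cost = tokens / 1_000_000 * model.blended_per_mtok
    return full_cost * (1 - cached_fraction * cache_discount)


print(route("Translate 'hello' to French").name)         # cheap-model
print(route("Please refactor this billing module").name)  # premium-model
print(discounted_cost(1_000_000, PREMIUM, cached_fraction=0.5))  # 3.375
```

In practice a routing rule would be tuned against your own traffic, for example by sampling requests and checking whether the cheap tier's answers are acceptable before sending a category of task to it.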

Conclusion: Make the switch for smarter spending

For pro AI users, APIs aren't just about flexibility—they're a cost-optimization powerhouse in 2025. While subscriptions suit beginners, the pay-per-use model, combined with features like caching and batching, can slash expenses by half or more at scale. Review your usage, compare providers using resources like Artificial Analysis, and integrate APIs to future-proof your AI strategy.

If you're building with multiple models, tools like 120 AI Chat can help test APIs and LLMs' responses side by side without extra costs.