How to run ChatGPT, Claude, and other AI models side by side with 120 AI Chat

Oct 5th, 2025

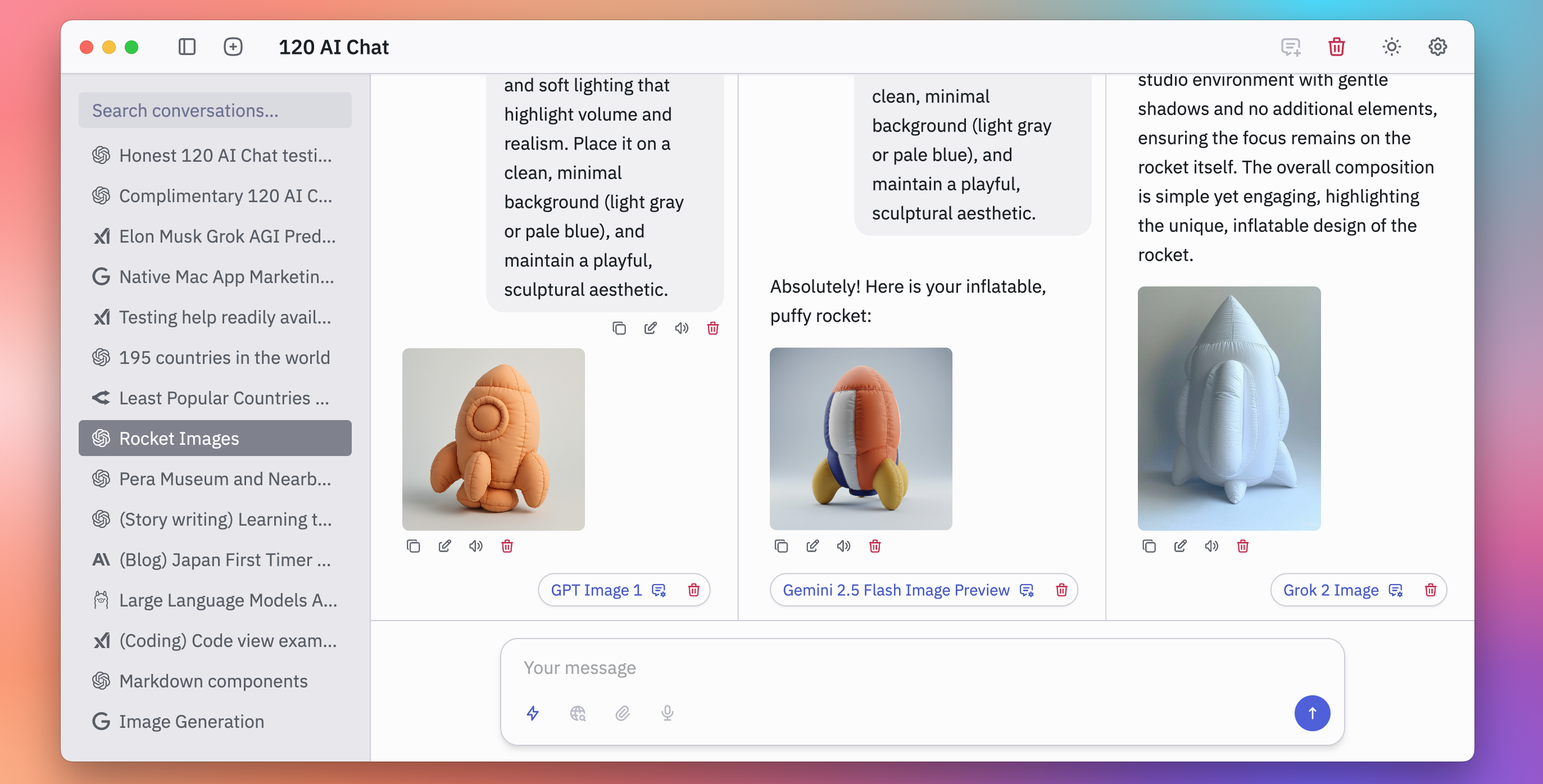

In today's AI-driven world, having access to multiple large language models (LLMs) like ChatGPT from OpenAI, Claude from Anthropic, Gemini from Google, and Grok from xAI can supercharge your productivity. But switching between different apps or browser tabs can be a hassle. That is where tools like 120 AI Chat come in: it is a native desktop app that lets you run these models side by side in one interface. As of September 2025, 120 AI Chat supports over 50 AI models, making it a good fit for developers, researchers, and everyday users who want to compare responses, multitask, or dig into complex queries without the friction of switching tools.

In this guide, I will walk you through why running models side by side is useful, how to set it up in 120 AI Chat, and real-world examples of its multi-thread chat feature. Whether you are brainstorming ideas, coding, or researching, this approach can make you more efficient and insightful. Let's get started!

Why run AI models side by side?

No single AI model is perfect—each has unique strengths. For instance:

- ChatGPT (e.g., GPT-5) excels at creative writing, quick brainstorming, and broad knowledge tasks but can sometimes hallucinate facts.

- Claude (e.g., Claude Opus 4.1) shines in logical reasoning, ethical considerations, and in-depth analysis, making it a strong choice for research or for coding tasks where correctness matters.

- Other models like Gemini or Grok offer multimodal capabilities (e.g., handling images) or a more humorous, real-time search-integrated style.

By running them side by side, you can:

- Compare answers instantly: Ask the same question to multiple models and spot differences, biases, or complementary insights. This reduces errors and gives a more balanced view—perfect for fact-checking or decision-making.

- Boost productivity: Handle parallel workflows, like using one model for drafting content while another analyzes data. No more tab-switching; everything's in one app.

- Enhance creativity and research: Combine outputs (e.g., ChatGPT's ideas refined by Claude's precision) to iterate faster.

- Save time and costs: Test free tiers or your own API keys across models without separate subscriptions.

In short, it's like having a team of AI experts at your desk, collaborating in real time. Users report up to 2x faster task completion when comparing models, as it minimizes back-and-forth revisions.
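To make the "compare answers" idea concrete, here is a minimal sketch of one way to measure how much two answers differ, using Python's standard `difflib`. The function names and the sample answers are hypothetical illustrations, not real model output, and this is not a feature of 120 AI Chat itself; it only shows the kind of comparison you would otherwise do by eye.

```python
import difflib

def similarity(answer_a: str, answer_b: str) -> float:
    """Return a 0..1 ratio of how similar two answers are, compared word by word."""
    words_a = answer_a.lower().split()
    words_b = answer_b.lower().split()
    return difflib.SequenceMatcher(None, words_a, words_b).ratio()

def differing_words(answer_a: str, answer_b: str) -> list[str]:
    """List words found in only one answer: '- word' (first only), '+ word' (second only)."""
    diff = difflib.ndiff(answer_a.lower().split(), answer_b.lower().split())
    # Keep only removals and additions; skip unchanged words and '?' hint lines.
    return [line for line in diff if line.startswith(("-", "+"))]

# Made-up answers standing in for two models' replies to the same question.
reply_one = "The capital is Paris"
reply_two = "The capital is Lyon"
print(similarity(reply_one, reply_two))
print(differing_words(reply_one, reply_two))
```

A low ratio does not say which answer is right; it only flags where the two replies diverge and deserve a closer read.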

Step-by-step guide: Setting up 120 AI Chat

120 AI Chat is a native app for Mac, Windows, and Linux, optimized for speed (rendering at up to 120 FPS for smooth interactions). It's free for personal use with your own API keys, or $39 for a commercial license with full features. Here's how to get started:

1. Download and Install:

- Head to the official site: [120.dev](https://120.dev/120-ai-chat).

- Choose your platform (Mac, Windows, or Linux) and download the installer.

- Run the setup — it's straightforward and takes under a minute. No bloatware or ads.

2. Add Your API Keys:

- Launch the app and go to Settings > API Providers.

- For ChatGPT: Sign up for an OpenAI API key at [OpenAI](https://platform.openai.com/account/api-keys) and paste it in.

- For Claude: Get an Anthropic API key from [Anthropic](https://console.anthropic.com) and add it.

- Repeat for other models like Gemini (Google AI Studio), Grok (xAI), or local LLMs via Ollama/LM Studio.

- Tip: Start with free or low-cost tiers like Gemini 2.5, GPT-3.5, or Claude Haiku to test. The app supports model controls like temperature (higher values give more varied, creative output) and output length.
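Before pasting keys into the app, it can help to check which ones you already have on hand. The following is a minimal sketch, assuming you keep your keys in environment variables. The variable names are assumptions for illustration: `OPENAI_API_KEY` and `ANTHROPIC_API_KEY` follow the convention used by those providers' SDKs, while the others may differ on your machine. The helper names are hypothetical, and none of this is part of 120 AI Chat.

```python
import os

# Assumed environment variable names; adjust to match your own setup.
PROVIDER_ENV_VARS = {
    "OpenAI": "OPENAI_API_KEY",
    "Anthropic": "ANTHROPIC_API_KEY",
    "Google": "GEMINI_API_KEY",
    "xAI": "XAI_API_KEY",
}

def mask(key: str) -> str:
    """Hide all but the last four characters so the key is safer to print."""
    if len(key) <= 4:
        return "*" * len(key)
    return "*" * (len(key) - 4) + key[-4:]

def configured_providers(env=os.environ) -> dict[str, str]:
    """Return {provider: masked key} for every provider whose variable is set."""
    found = {}
    for provider, var in PROVIDER_ENV_VARS.items():
        key = env.get(var, "").strip()
        if key:  # skip unset or whitespace-only values
            found[provider] = mask(key)
    return found
```

Calling `configured_providers()` with no arguments reads the real environment; passing a plain dictionary instead makes it easy to try out without touching real keys.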

3. Create Your First Multi-Thread Chat:

- Click "New conversation" to start a chat.

- In the chat window, select a model from the dropdown (e.g., ChatGPT).

- To run side by side: Click the "New thread" icon at the top of the window.

- Assign different models to each thread—e.g., Thread 1: ChatGPT, Thread 2: Claude.

- The UI lets you view threads in a split-screen or tabbed layout for easy comparison.
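Conceptually, a multi-thread chat sends one prompt to several models at once and collects the replies next to each other. The sketch below illustrates that fan-out pattern with Python's standard `concurrent.futures`. It is an assumption about the general idea, not a description of how 120 AI Chat is implemented, and the two "models" are stub functions with hypothetical names standing in for real provider calls.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict

# A "model" here is any function that takes a prompt and returns text.
# These stubs are placeholders; a real setup would call a provider API.
def fake_chatgpt(prompt: str) -> str:
    return f"[ChatGPT stub] {prompt.upper()}"

def fake_claude(prompt: str) -> str:
    return f"[Claude stub] {prompt[::-1]}"

def ask_side_by_side(prompt: str, models: Dict[str, Callable[[str], str]]) -> Dict[str, str]:
    """Send the same prompt to every model concurrently and gather the replies."""
    with ThreadPoolExecutor(max_workers=max(len(models), 1)) as pool:
        futures = {name: pool.submit(fn, prompt) for name, fn in models.items()}
        # result() blocks until that model's reply is ready.
        return {name: future.result() for name, future in futures.items()}

threads = {"Thread 1: ChatGPT": fake_chatgpt, "Thread 2: Claude": fake_claude}
for name, reply in ask_side_by_side("hello", threads).items():
    print(f"{name} -> {reply}")
```

Because the calls run concurrently, the total wait is roughly that of the slowest model rather than the sum of all of them, which is the practical benefit of asking in parallel.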

4. Customize for Your Workflow:

- Enable themes (e.g., Catppuccin or Nord) for a developer-friendly look.

- Use the Prompt Library to save reusable prompts, like "Compare pros/cons of X" for quick starts.

- Attach files (PDFs, images) for context-aware responses.

5. Advanced Setup Tips:

- For local models: Install Ollama or LM Studio separately, then connect via the app for offline use (great for privacy).

- Integrate web browsing or image generation: Enable these in settings for models that support them (e.g., DALL·E in ChatGPT, Stable Diffusion, FLUX.1, or Google's Gemini image model nicknamed Nano Banana).
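For the local-model tip, it is worth confirming that Ollama is answering before you connect the app to it. Here is a minimal sketch using only the Python standard library, assuming Ollama is running on its default port (11434) and that you have already pulled a model. The model name used in the usage note is an assumption, and the helper names are hypothetical; this is a quick check you can run yourself, not how 120 AI Chat talks to Ollama.

```python
import json
import urllib.request

# Ollama's default local endpoint for one-shot text generation.
OLLAMA_URL = "http://localhost:11434/api/generate"

def build_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming generate request for a local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def ask_local(model: str, prompt: str, timeout: float = 60.0) -> str:
    """Send the prompt and return the generated text (needs Ollama running locally)."""
    with urllib.request.urlopen(build_request(model, prompt), timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    return body["response"]
```

For example, after `ollama pull llama3.2`, calling `ask_local("llama3.2", "Say hello in one sentence.")` should return a short reply. If it raises a connection error, the Ollama server is most likely not running.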

Once set up, you're ready to multitask like a pro!

Additional benefits: Why this makes you more productive

Beyond comparison, 120 AI Chat's side-by-side setup amplifies efficiency:

- Time Savings: No app-hopping—handle queries in parallel, like fact-checking with one model while generating content with another.

- Better Decision-Making: Cross-model validation builds confidence in answers, especially for controversial or complex topics.

- Customization for Roles: Developers love the code themes and retrieval-augmented generation (RAG); researchers appreciate file integrations; casual users enjoy voice input (coming soon) and image generation for fun tasks.

- Cost-Effective: Use free API tiers or local models to experiment without premium subscriptions.

- Privacy and Offline Mode: Local LLMs keep sensitive data on-device.

Overall, it's a productivity multiplier—turn one query into multifaceted insights without extra effort.

Final thoughts: Get started today

Running ChatGPT, Claude, and other models side by side with 120 AI Chat transforms how you interact with AI, making it more collaborative, efficient, and fun. Whether you're a developer debugging code, a researcher compiling data, or just someone exploring ideas, the multi-thread feature alone is worth trying. Download it from our site, grab your API keys, and start experimenting. If you have questions, the app's support team or docs are super helpful. Happy chatting!